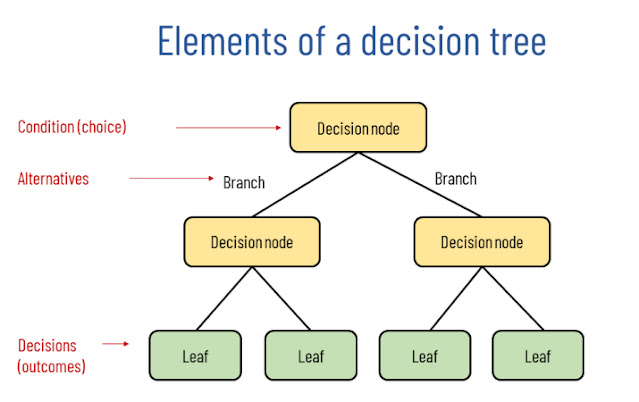

Decision Trees (DTs) are a non-parametric supervised learning method used for classification and regression. The goal is to create a model that predicts the value of a target variable by learning simple decision rules inferred from the data features. A tree can be seen as a piecewise constant approximation.
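To make the "piecewise constant" idea concrete, here is a minimal sketch of the smallest possible regression tree: a single split on one feature (often called a stump). The function names and the toy data are made up for illustration; real libraries use faster and more general split searches.

```python
from statistics import mean

def fit_stump(xs, ys):
    """Fit a depth-1 regression tree (one split) on a single feature.

    Returns (threshold, left_value, right_value), chosen to minimise the
    sum of squared errors on the training data.
    """
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold fits between identical x values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        left_value, right_value = mean(left), mean(right)
        sse = (sum((y - left_value) ** 2 for y in left)
               + sum((y - right_value) ** 2 for y in right))
        if best is None or sse < best[0]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (sse, threshold, left_value, right_value)
    if best is None:
        raise ValueError("need at least two distinct x values")
    return best[1:]

def predict_stump(stump, x):
    """Predict one constant on each side of the learned threshold."""
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value

# Hypothetical toy data with two clear groups
xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
ys = [5.0, 6.0, 7.0, 20.0, 21.0, 22.0]
stump = fit_stump(xs, ys)
print(stump)  # (6.5, 6.0, 21.0)
```

Every x at or below 6.5 gets the prediction 6.0 and every x above it gets 21.0: two constant pieces. A deeper tree simply adds more thresholds and therefore more pieces.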

Why Non-Parametric?

Non-parametric machine learning algorithms make no strong assumptions about the form of the mapping function from inputs to outputs. Because of this, they are free to learn any functional form from the training data. For a decision tree, the number of splits is not fixed in advance; it grows with the data.

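A decision tree has a hierarchical structure: each internal node tests a feature, each branch corresponds to an outcome of that test, and each leaf holds a prediction. Because this structure is learned from the data rather than fixed up front, the same method handles both regression and classification tasks.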

Hyper-parameters

max_depth: the maximum depth of the tree, i.e. the largest number of splits allowed on any path from the root to a leaf

The deeper the tree, the more complex it becomes: with more splits, it captures more detail about the training data.

Limitation: a very deep tree is prone to overfitting. It predicts the training data well but may fail to generalise to new data, so the training error is low while the test error is high.
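The effect of depth on training error can be sketched with a small, hand-rolled regression tree on one feature. This is an illustrative toy, not how any particular library implements trees; `build_tree`, `predict`, `mse` and the data are all hypothetical names and values chosen for the example.

```python
from statistics import mean

def sse(ys):
    """Sum of squared errors around the mean (0 for an empty list)."""
    if not ys:
        return 0.0
    m = mean(ys)
    return sum((y - m) ** 2 for y in ys)

def build_tree(points, max_depth, depth=0):
    """Grow a regression tree on (x, y) pairs, stopping at max_depth."""
    ys = [y for _, y in points]
    xs = sorted({x for x, _ in points})
    if depth >= max_depth or len(xs) < 2:
        return {"value": mean(ys)}  # leaf: predict the mean target
    best = None
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2  # candidate threshold between neighbouring x values
        left = [p for p in points if p[0] <= t]
        right = [p for p in points if p[0] > t]
        cost = sse([y for _, y in left]) + sse([y for _, y in right])
        if best is None or cost < best[0]:
            best = (cost, t, left, right)
    _, t, left, right = best
    return {"threshold": t,
            "left": build_tree(left, max_depth, depth + 1),
            "right": build_tree(right, max_depth, depth + 1)}

def predict(tree, x):
    """Walk from the root to a leaf and return its value."""
    while "value" not in tree:
        tree = tree["left"] if x <= tree["threshold"] else tree["right"]
    return tree["value"]

def mse(tree, points):
    """Mean squared error of the tree on a list of (x, y) pairs."""
    return mean((predict(tree, x) - y) ** 2 for x, y in points)

# Made-up data: a step at x = 6 plus a fixed "noise" pattern
noise = [0.9, 0.4, -0.7, -1.0, 0.6, 0.8, -0.3, -0.5, 0.2, 0.7, -0.9, -0.4]
data = [(float(x), (10.0 if x >= 6 else 0.0) + noise[x]) for x in range(12)]
train, test = data[0::2], data[1::2]  # even x for training, odd x for testing

for depth in (1, 2, 3, 10):
    tree = build_tree(train, max_depth=depth)
    print(depth, round(mse(tree, train), 3), round(mse(tree, test), 3))
```

In this sketch the training error can only stay the same or fall as max_depth grows, because every extra split refines an existing leaf. With a large enough depth each training point ends up in its own leaf and the training error reaches zero, which says nothing about how well the tree does on the held-out points.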

min_samples_split: the minimum number of samples required to split an internal node

It can be given either as an integer (an absolute number of samples) or as a fraction of the training samples.

min_samples_leaf: the minimum number of samples required at a leaf node (a terminal node that is not split further). A split is only considered if it leaves at least this many samples in each branch.
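As a rough sketch of how these two settings interact, the snippet below turns an integer or fractional setting into an absolute count and then checks a candidate split against both limits. The helper names are hypothetical, and the rounding rule (round fractions up) is an assumption taken from scikit-learn's documented convention; other libraries may differ.

```python
from math import ceil

def resolve_min_samples(value, n_samples):
    """Turn an int count or a float fraction into an absolute sample count.

    Assumption: fractions are rounded up, ceil(fraction * n_samples),
    following scikit-learn's documented rule.
    """
    if isinstance(value, int):
        return value
    return ceil(value * n_samples)

def split_allowed(n_node, n_left, n_right, min_samples_split, min_samples_leaf):
    """Check whether a candidate split respects both constraints."""
    return (n_node >= min_samples_split
            and n_left >= min_samples_leaf
            and n_right >= min_samples_leaf)

n_samples = 150
min_split = resolve_min_samples(0.03, n_samples)  # 3% of 150 is 4.5, rounded up
min_leaf = resolve_min_samples(3, n_samples)      # already an absolute count
print(min_split, min_leaf)  # 5 3

print(split_allowed(8, 4, 4, min_split, min_leaf))  # True
print(split_allowed(8, 6, 2, min_split, min_leaf))  # False: right branch too small
print(split_allowed(4, 2, 2, min_split, 1))         # False: node too small to split
```

Raising either value makes the tree more conservative: min_samples_split stops small nodes from being split at all, while min_samples_leaf rejects lopsided splits that would isolate a handful of samples.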

max_features: the number of features to consider when looking for the best split

It can be given either as an integer (the maximum number of features) or as a fraction of the total number of features to consider at each split.
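A minimal sketch of what this setting does at each split: resolve it to a count, then draw that many features at random as split candidates. The function names are hypothetical, and the rule for fractions (truncate, but never go below one feature) is an assumption based on scikit-learn's documented behaviour.

```python
import random

def resolve_max_features(value, n_features):
    """Turn an int count or a float fraction into a number of features.

    Assumption: fractions resolve to max(1, int(fraction * n_features)),
    following scikit-learn's documented rule.
    """
    if isinstance(value, int):
        return value
    return max(1, int(value * n_features))

def candidate_features(n_features, max_features, rng):
    """Pick the feature indices that one split is allowed to examine."""
    k = resolve_max_features(max_features, n_features)
    return sorted(rng.sample(range(n_features), k))

print(resolve_max_features(3, 10))     # 3
print(resolve_max_features(0.5, 10))   # 5
print(resolve_max_features(0.01, 10))  # 1 (never fewer than one feature)

rng = random.Random(0)  # seeded so repeated runs draw the same subsets
chosen = candidate_features(10, 0.5, rng)
print(chosen)  # five distinct indices between 0 and 9
```

Looking at only a subset of features per split adds randomness to the tree, which is mainly useful when many trees are combined; for a single tree, using all features is the usual starting point.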
